Korn Seang Edouard Song imagines, designs and creates tools as artistic expressions of potential futures.

Reflecting Pool

A teaching method for university courses powered by students’ critical thinking, rejecting the scripted lecture in favour of a collaborative process built on skepticism, doubts, and questions.

2014

Digital application

The importance of critical thinking in STEM subjects (Science, Technology, Engineering and Mathematics) is not reflected by the way they are taught in universities.

Teaching relies heavily on lectures, but these scripted sessions rarely give students the opportunity to think, owing to the limited time available and the size of the audience. Students usually end up listening passively to the instructor, often taking notes just to stay alert. Despite becoming more integrated with technology, the format has not changed dramatically over the years: it remains learning through listening.

Active reading of textbooks and problem solving take up most of the remaining study time, and should give students a chance to think. Ideally, students would discuss and assimilate the knowledge before applying it to a few scenarios. In practice, this sequence is often reversed: the set exercises become the core material, relegating the course notes to a mere reference guide. Thinking then becomes secondary, and learning is reduced to memorising and following instructions.

Reflecting Pool is a teaching method for STEM subjects in universities, powered and directed by students’ critical thinking.

Instead of relying on a pre-written script, Reflecting Pool uses students’ own questions, doubts and thoughts as the primary material for conveying knowledge and understanding.

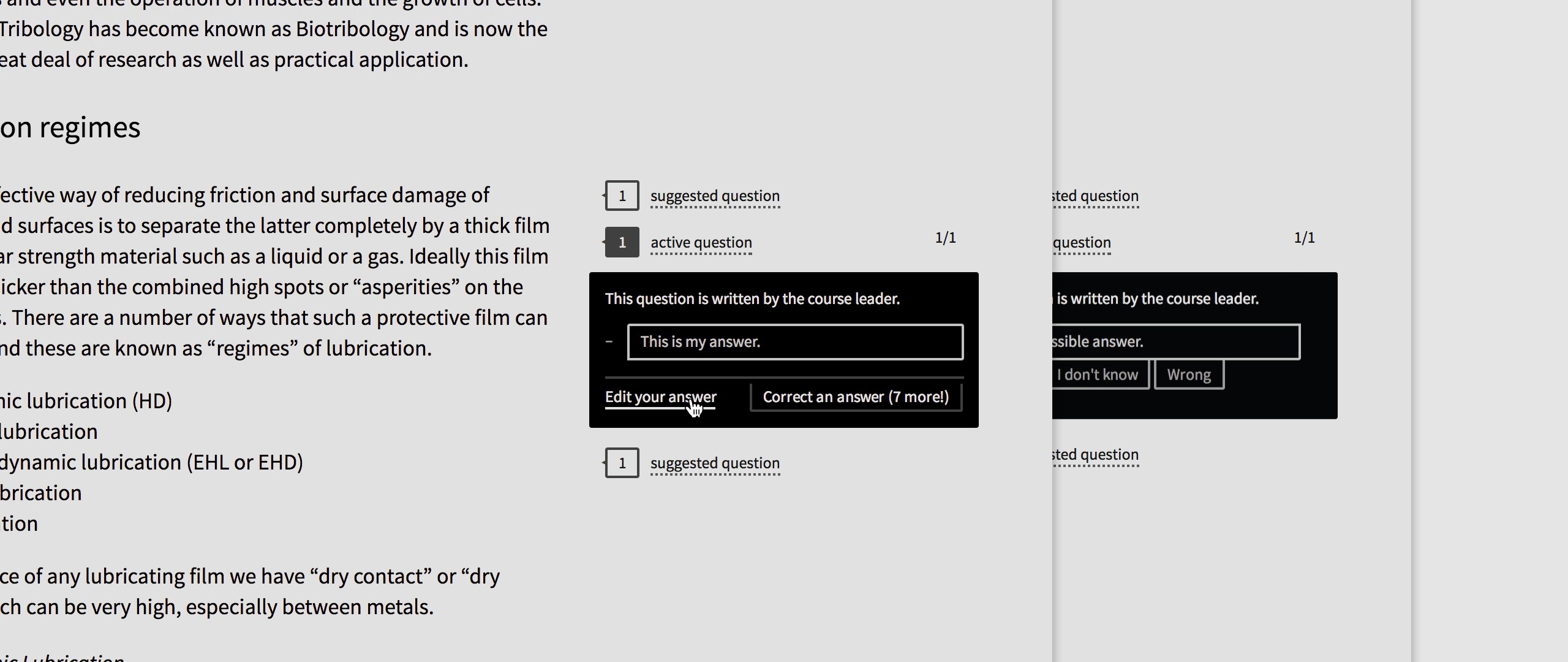

The process begins with an individual, active reading of the course notes, during which students are encouraged to submit open-ended questions[1] to the class in an anonymous, shame-free setting.

- 1

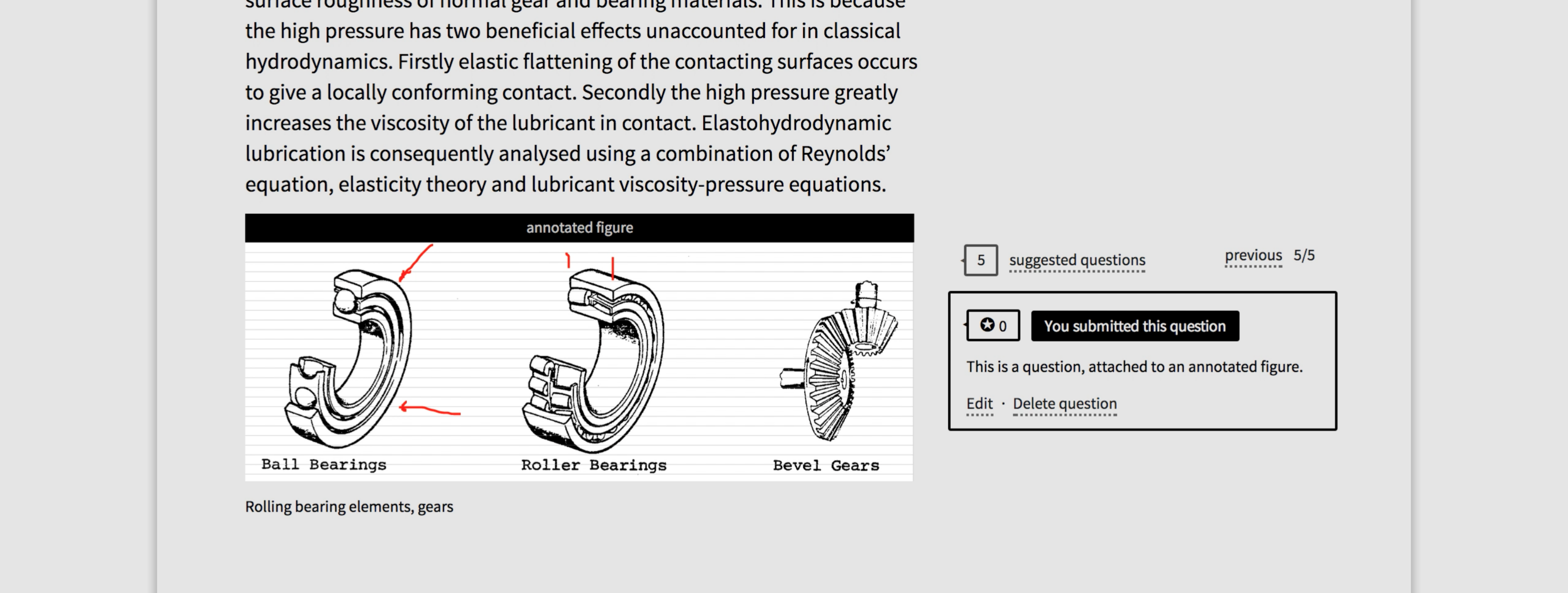

Questions are contextualised: they can refer to a specific chapter, paragraph, or figure. They may even include runtime parameters for an explorable explanation, or annotations for a figure.

Proposing an open-ended question about a given figure from the course notes, with annotations.

The most popular questions are promoted, signalling that they can now receive answers. Students are then given a short period to make up their minds and write answers to these questions, even if they believe their solution is flawed.
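To make the question-and-promotion step concrete, here is a minimal Python sketch. The `Question` fields mirror the context described above (chapter, paragraph, figure, annotations), but the schema, the upvote counter, and the `top_k`/`min_votes` promotion rule are hypothetical assumptions: the source only says that the most popular questions are promoted.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Question:
    """A student-proposed question anchored to part of the course notes (hypothetical schema)."""
    text: str
    chapter: str
    paragraph: Optional[int] = None
    figure: Optional[str] = None
    annotations: list[str] = field(default_factory=list)
    upvotes: int = 0
    promoted: bool = False


def promote_popular(questions: list[Question], top_k: int = 3, min_votes: int = 5) -> list[Question]:
    """Promote the top_k most upvoted questions that reach min_votes, so they can receive answers."""
    eligible = [q for q in questions if q.upvotes >= min_votes]
    # sorted() is stable, so questions with equal votes keep their submission order.
    ranked = sorted(eligible, key=lambda q: q.upvotes, reverse=True)
    for q in ranked[:top_k]:
        q.promoted = True
    return ranked[:top_k]
```

A threshold plus a cap is only one possible policy; a class-size-relative threshold would work just as well.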

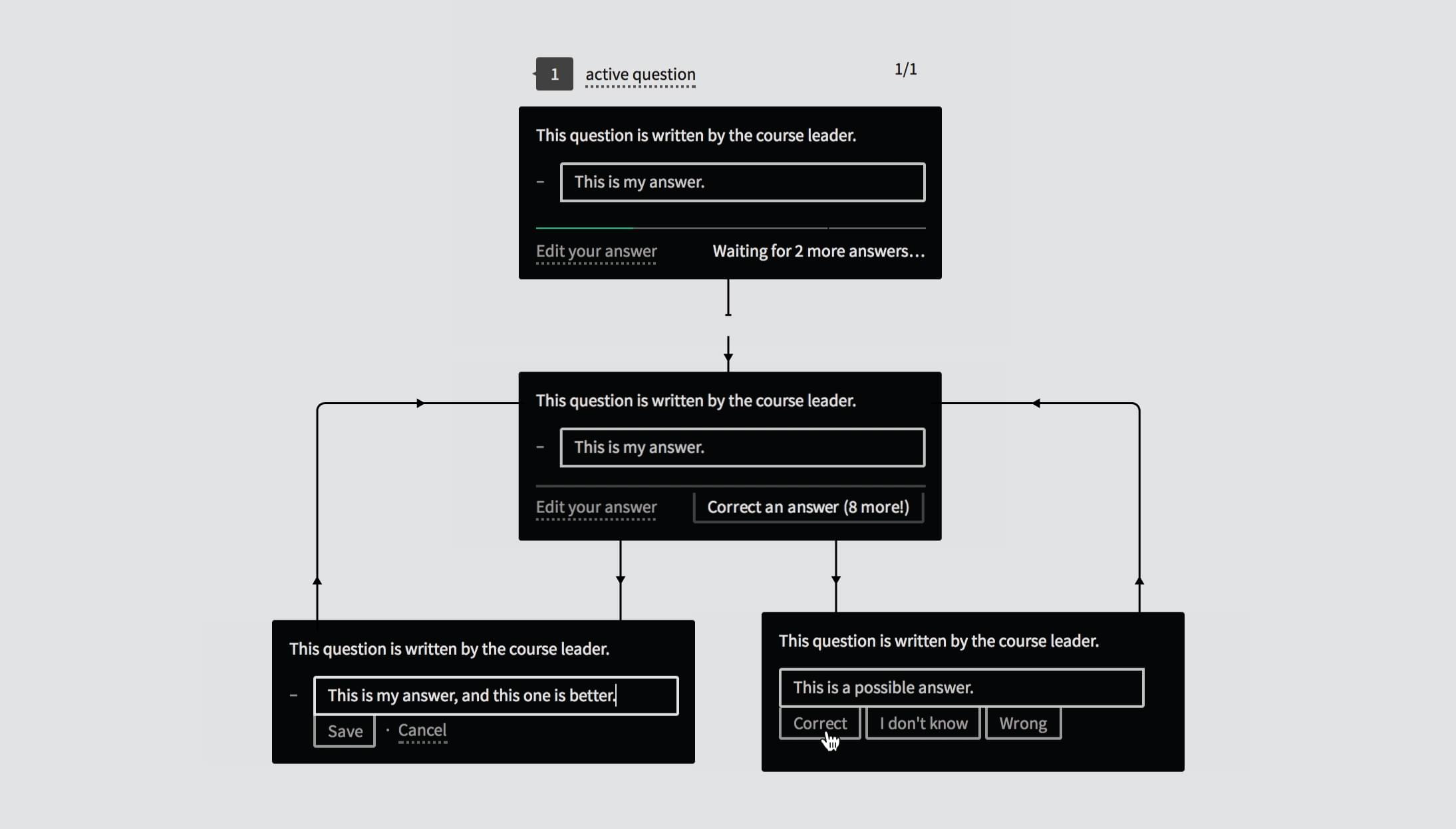

The optimisation phase begins once enough answers have been collected. In this stage, students successively review and grade[2] a few answers from the pool, confronting their own thinking with a likely different opinion. After each review, they are given the chance to reconsider and, if they wish, amend their own answer without any penalty.

[2] Grading an answer amounts to answering a simple multiple-choice question: the answer shown is correct, incorrect, or “I don’t know”.

Lifecycle of a question: from answering a question, to improving one’s own solution after reviewing and grading other answers from the pool.

Student answers are expected to evolve throughout the optimisation phase, eventually converging on what each student confidently considers their most thoughtful and convincing proposition.
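The review loop can be sketched as follows, under several assumptions not stated in the source: each student submits one answer per question (so an answer can be identified by its author), reviewers are assigned uniformly at random, and the three possible grades are encoded as +1, -1 and `None`. `assign_reviews`, `record_grade` and `amend` are hypothetical helpers.

```python
import random
from typing import Optional

# Hypothetical numeric encoding of the three possible grades.
# "I don't know" maps to None so it is later treated as a missing entry.
GRADE_VALUES: dict[str, Optional[float]] = {"correct": 1.0, "incorrect": -1.0, "dont_know": None}


def assign_reviews(students: list[str], k: int, seed: int = 0) -> dict[str, list[str]]:
    """Give each student up to k distinct answers to review, sampled from the pool, never their own.

    Answers are keyed by their author's id here; reviewers would only see the anonymous text.
    """
    rng = random.Random(seed)
    assignments: dict[str, list[str]] = {}
    for student in students:
        others = [s for s in students if s != student]
        assignments[student] = rng.sample(others, min(k, len(others)))
    return assignments


def record_grade(grades: dict[tuple[str, str], Optional[float]],
                 reviewer: str, author: str, label: str) -> None:
    """Store one grade as +1, -1 or None (for 'dont_know')."""
    grades[(reviewer, author)] = GRADE_VALUES[label]


def amend(history: dict[str, list[str]], author: str, new_text: str) -> None:
    """Append a revised answer; earlier versions are kept and no penalty is applied."""
    history.setdefault(author, []).append(new_text)
```

Uniform sampling is the simplest choice; a real deployment would probably balance the number of reviews each answer receives, so that no answer is left with too few grades.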

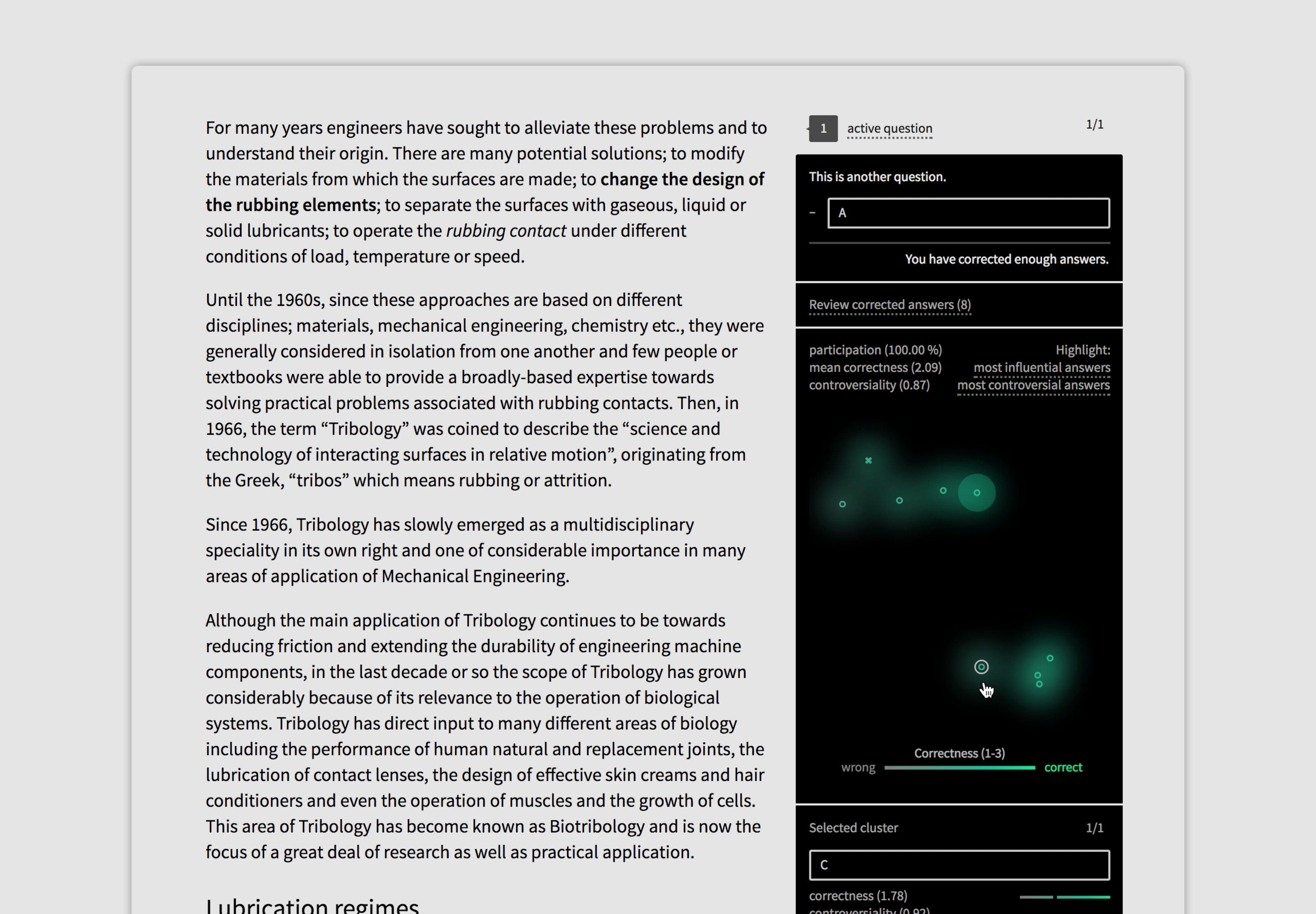

Reflecting Pool gathers, analyses and compiles[3] these answers and grades into a map showing how the class, as a unit, answered each question.

[3] Because each student grades only a limited sample of answers, the platform uses a low-rank matrix completion algorithm to extrapolate the missing grades from the reviews already collected. This is a well-established technique in recommender systems.
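The source does not specify which matrix completion algorithm the platform uses. As an illustration of the general idea, the sketch below fits a low-rank factorisation (grades ≈ U V^T) to the observed grades only, by plain full-batch gradient descent with L2 regularisation, then reads the missing grades off the product. The function name, the +1/-1/`None` encoding, and the default `rank`, `lr`, `reg` and `epochs` values are assumptions chosen for a small example.

```python
import random
from typing import Optional


def complete_grades(grades: list[list[Optional[float]]], rank: int = 2, lr: float = 0.05,
                    reg: float = 0.01, epochs: int = 3000, seed: int = 0) -> list[list[float]]:
    """Fill in missing grades by fitting grades ~= U @ V.T on the observed entries only.

    grades[i][j] is reviewer i's grade for answer j: +1 (correct), -1 (incorrect) or None (missing).
    Minimises 0.5 * sum((u_i . v_j - g_ij)^2) + 0.5 * reg * (|U|^2 + |V|^2) by gradient descent.
    """
    n_rows, n_cols = len(grades), len(grades[0])
    rng = random.Random(seed)
    # Small random initialisation; all-zero factors would be a stationary point.
    U = [[rng.gauss(0.0, 0.1) for _ in range(rank)] for _ in range(n_rows)]
    V = [[rng.gauss(0.0, 0.1) for _ in range(rank)] for _ in range(n_cols)]
    observed = [(i, j, g) for i, row in enumerate(grades) for j, g in enumerate(row) if g is not None]

    for _ in range(epochs):
        # Start from the regulariser's gradient, then add the data term of each observed grade.
        grad_U = [[reg * x for x in row] for row in U]
        grad_V = [[reg * x for x in row] for row in V]
        for i, j, g in observed:
            err = sum(U[i][k] * V[j][k] for k in range(rank)) - g
            for k in range(rank):
                grad_U[i][k] += err * V[j][k]
                grad_V[j][k] += err * U[i][k]
        for i in range(n_rows):
            for k in range(rank):
                U[i][k] -= lr * grad_U[i][k]
        for j in range(n_cols):
            for k in range(rank):
                V[j][k] -= lr * grad_V[j][k]

    # Dense prediction for every (reviewer, answer) pair, including the unobserved ones.
    return [[sum(U[i][k] * V[j][k] for k in range(rank)) for j in range(n_cols)]
            for i in range(n_rows)]
```

For example, with six reviewers split into two camps and five grades hidden, `complete_grades(grades, rank=1)` fills each hidden entry with a value close to the +1 or -1 that the camp structure implies. On real class data the learning rate may need lowering, since the largest stable step size shrinks as answers accumulate more reviews.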

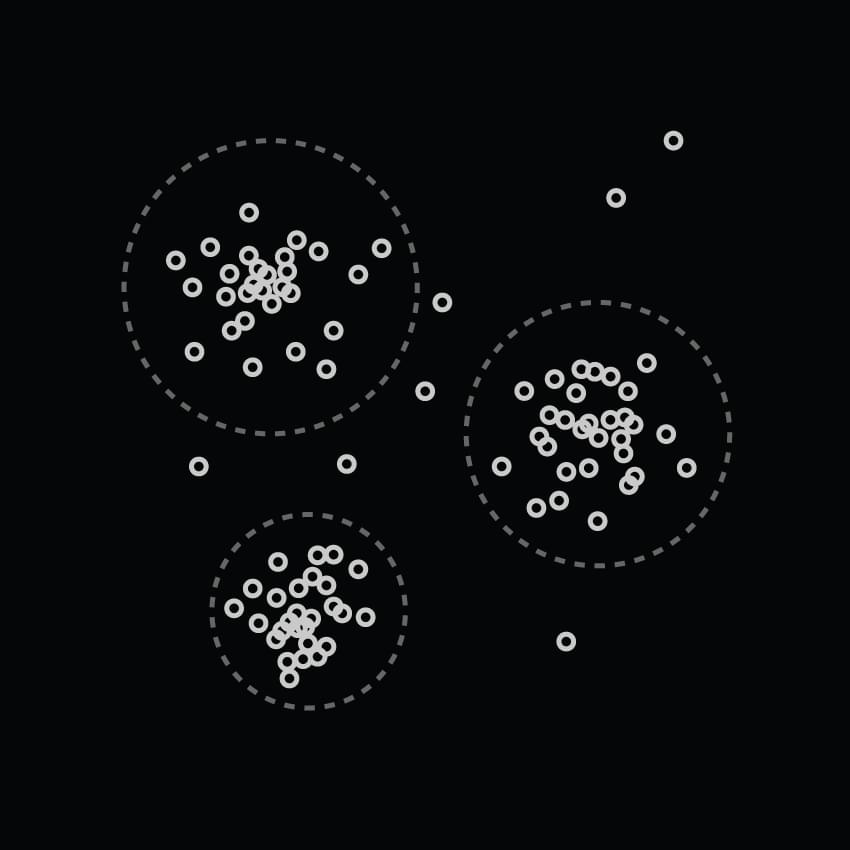

A course leader analysing answers to a given question: from finding clusters of similar solutions to identifying and inspecting controversial or influential answers.

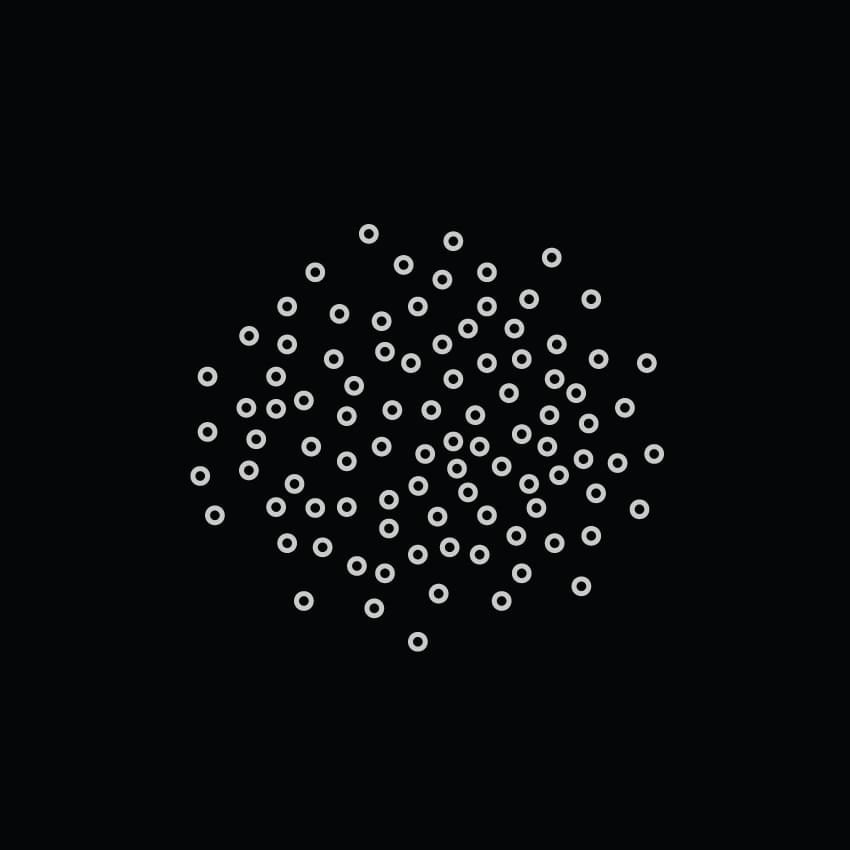

Answers, represented as small pointers, are positioned according to their similarity: similar answers appear close to each other, dissimilar ones far apart. By looking at the map, instructors can tell whether the students reached a consensus or a dispute and, after reviewing a few selected answers, judge whether the underlying concepts were properly assimilated[4]. This takes just a few minutes, without relying on long examination and marking sessions.

[4] Students may well reach a wrong consensus, with most of them agreeing on an incorrect answer; this often reveals a flaw in their understanding.
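To support that judgement, a platform could surface a handful of answers for the instructor to read. The sketch below is a hypothetical helper, not the platform's documented behaviour: it ranks answers by approval rate (the share of “correct” among the correct/incorrect grades, ignoring “I don’t know”) and returns the most approved ones, where a wrong consensus would show up, and the most contested ones, whose approval is closest to 50%.

```python
from typing import Optional

# Rows are reviewers, columns are answers; +1 correct, -1 incorrect, None missing or "I don't know".
Grades = list[list[Optional[float]]]


def approval_rates(grades: Grades) -> list[Optional[float]]:
    """Fraction of positive grades each answer received, or None if it was never graded."""
    rates: list[Optional[float]] = []
    for j in range(len(grades[0])):
        votes = [row[j] for row in grades if row[j] is not None]
        rates.append(sum(1 for g in votes if g > 0) / len(votes) if votes else None)
    return rates


def answers_to_inspect(grades: Grades, n: int = 2) -> dict[str, list[int]]:
    """Pick answer indices for the instructor: the n most approved (to catch a wrong consensus)
    and the n most contested (approval closest to 50%)."""
    rated = [(j, r) for j, r in enumerate(approval_rates(grades)) if r is not None]
    most_approved = sorted(rated, key=lambda t: t[1], reverse=True)[:n]
    most_contested = sorted(rated, key=lambda t: abs(t[1] - 0.5))[:n]
    return {"consensus": [j for j, _ in most_approved],
            "contested": [j for j, _ in most_contested]}
```

It works on the raw +1/-1 grades; predictions from a completed grade matrix could be passed in as well, since only their sign is used.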

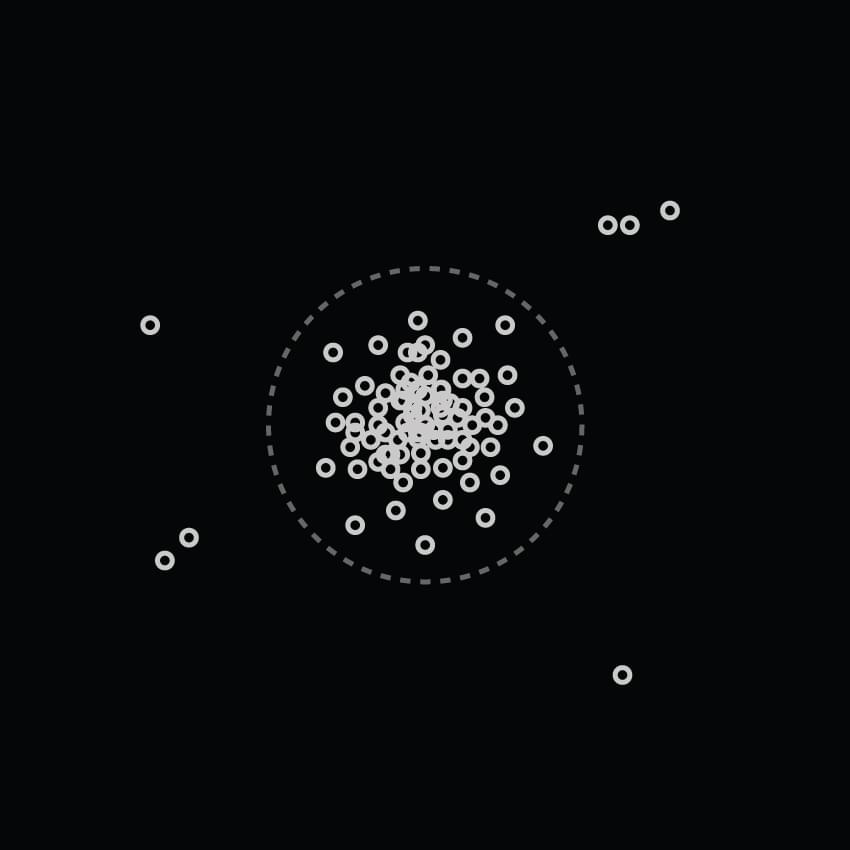

Typical answer distributions: a consensus converging on one type of answer (left), clusters of similar answers whose groups disagree with one another (center), and chaotic answers where every solution is contested (right).
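How answers are placed on the map is not detailed in the source. One standard way to turn pairwise dissimilarities into 2D positions is classical multidimensional scaling (MDS), sketched below in plain Python. What the distances measure is an assumption: they could, for instance, be Euclidean distances between answers’ columns in the completed grade matrix, so that answers graded alike by the class land close together. The power-iteration eigensolver keeps the example dependency-free but is only practical for modest class sizes.

```python
import math
import random


def classical_mds(dist: list[list[float]], dims: int = 2, iters: int = 500,
                  seed: int = 0) -> list[list[float]]:
    """Embed n items in `dims` dimensions so that Euclidean distances approximate `dist`."""
    n = len(dist)
    sq = [[d * d for d in row] for row in dist]
    row_mean = [sum(row) / n for row in sq]  # equal to the column means, since sq is symmetric
    grand_mean = sum(row_mean) / n
    # Double centring: B = -1/2 * J D^2 J, the Gram matrix of centred coordinates.
    B = [[-0.5 * (sq[i][j] - row_mean[i] - row_mean[j] + grand_mean) for j in range(n)]
         for i in range(n)]

    rng = random.Random(seed)
    coords = [[0.0] * dims for _ in range(n)]
    for d in range(dims):
        # Power iteration for the leading eigenvector of the (deflated) matrix B.
        v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iters):
            w = [sum(B[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:
                break
            v = [x / norm for x in w]
        Bv = [sum(B[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = sum(v[i] * Bv[i] for i in range(n))  # Rayleigh quotient: eigenvalue estimate
        scale = math.sqrt(max(lam, 0.0))  # a negative eigenvalue (non-Euclidean input) gives 0
        for i in range(n):
            coords[i][d] = scale * v[i]
        # Deflation: remove this component so the next pass finds the next eigenvector.
        B = [[B[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords
```

For Euclidean input distances, the output reproduces them up to rotation, reflection and translation; for example, the four corners of a 3 by 4 rectangle come back as a 3 by 4 rectangle.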

Any misunderstood concept can then be re-explained in more detail in dedicated lectures, informed by the gathered data.

By design, Reflecting Pool encourages students to think critically in order to learn new concepts and build understanding.

This process provides time and space to think. It does not expect initial answers to remain untouched: students have many opportunities to change their minds, make mistakes, and rewrite their answers as they are confronted with other students’ viewpoints.

Answers are anonymous. This frees students from any premature judgement when reviewing: they have to look at each solution with the same fresh eye and attention. All opinions are legitimate.

This anonymity also allows the teaching staff to submit answers without being detected. They would typically write persuasive, convincing, yet wrong explanations to gauge the class’s reaction. This way, students must remain constantly alert and skeptical: no opinion, however cogent and attractive it might sound, should be taken for granted.

A proof of concept was successfully developed. Implementation and extensive testing within Imperial College London’s Department of Mechanical Engineering were planned, but have been postponed due to time constraints.